Inside the Cameron County Detention Center, in Brownsville, Texas, inmates wearing orange jumpsuits peer from behind the glass of five group holding cells. The jail is about a twelve-minute drive from the banks of the winding Rio Grande, which marks the US–Mexico border. Facing issues like violence, economic pressure, and climate change, and looking for a better life, thousands of migrants cross the southern US border every month. If they’re arrested and don’t have documentation, the US deports them.

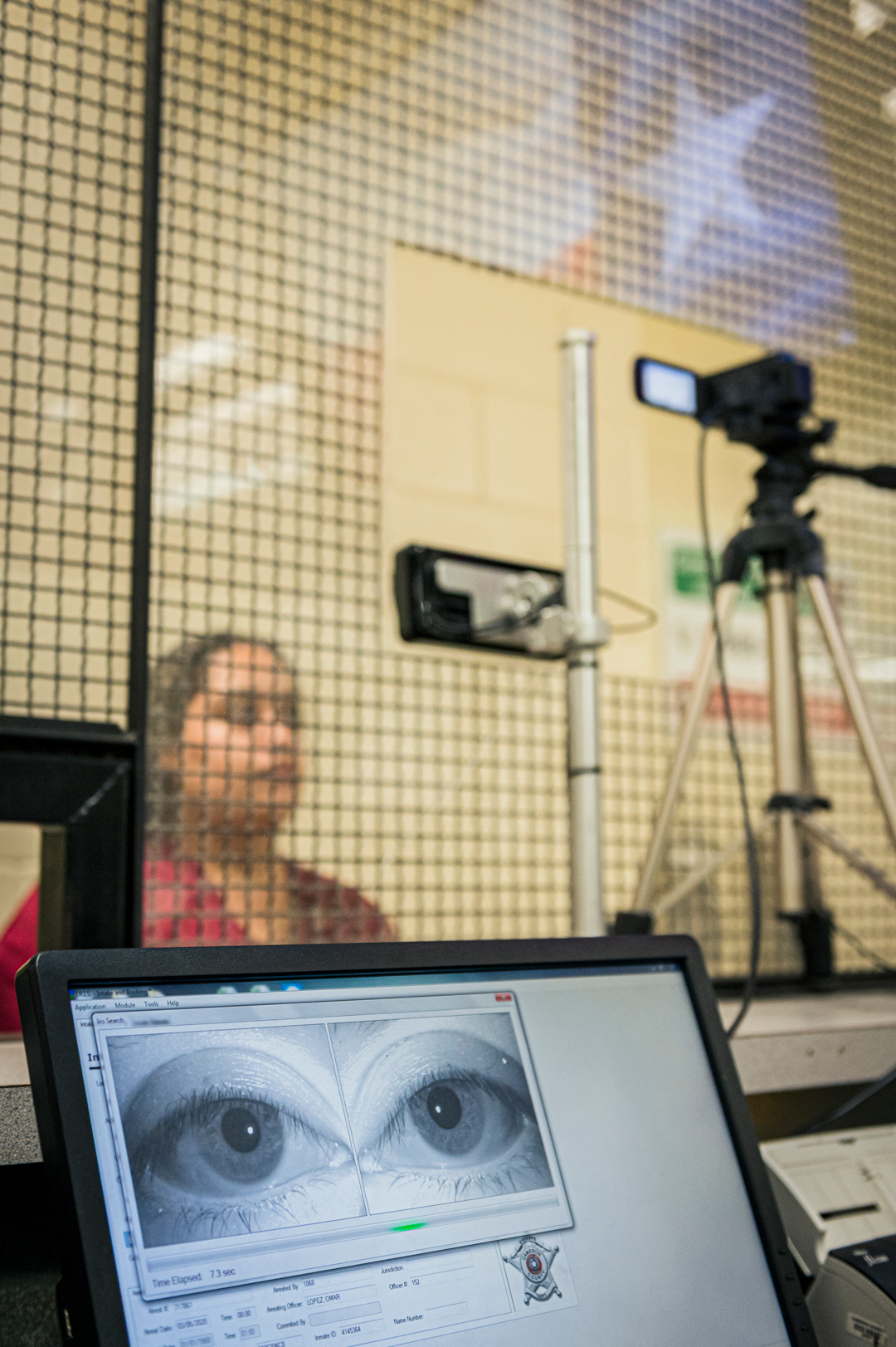

Every day around noon, people who were arrested the previous day and held at local city jails overnight are bused here and booked into the system. Guards fingerprint them, take their mugshots, and since 2017, they take one additional step: they scan their eyes into the Inmate Identification and Recognition System (IRIS). Developed and patented by Biometric Intelligence and Identification Technologies, or BI2 Technologies, a privately held corporation headquartered in Plymouth, Massachusetts, IRIS is more accurate and faster than fingerprinting—identifying inmates in approximately eight seconds or less.

Kassandra Flores is wearing a state-issued boxy maroon top, matching baggy pants too long for her legs, and beige sandals. She stands up straight with her arms at her sides and her toes at the edge of a piece of black and yellow tape on the tile floor. A guard tells her to look directly into the lens of a camera that will capture a high-resolution image of her irises so an algorithm can compare it against a vast, ever-growing database owned by law enforcement. The image will be stored there indefinitely, unless a judge orders the record expunged, and shared with law enforcement agencies across the country, including the FBI. Individual law enforcement agencies can also choose to share the data with Immigration and Customs Enforcement (ICE).

Cameron County’s use of this technology does not stem from state or federal regulations, statutes, or protocols—it is not part of any broader official policy. It was, rather, introduced at the behest of local officials. Omar Lucio, who was sheriff at the time I visited last spring, was the first along the US–Mexico border to start using IRIS. Another thirty soon followed suit. That success, along with its popularity with other US law enforcement agencies, allowed BI2 Technologies to expand and ink a deal in June 2019 to make the technology available to more than 3,000 sheriffs across the US.

BI2 Technologies started out developing iris scanning as a tool to identify missing children. CEO and cofounder Sean Mullin tells me that law enforcement officials immediately started suggesting new uses for the technology; the next step was to expand to locate missing seniors. Today, BI2 Technologies also sells an iOS and Android app called MORIS that allows for mobile access to IRIS, and a revenue-generating background-check system for sheriffs’ offices.

I volunteered to interact with IRIS to better understand how it works. I stood facing a mesh wall that separated me from a guard sitting behind a large screen. He adjusted the black camera to my eye height. My eyes were reflected in a thin, rectangular mirror above the lens. Red lights flashed on either side of the lens, then another light flashed green. A monitor typically available only to the jail staff showed two close-up greyscale photos of my eyes. The images had a cold, unhuman quality, like an X-ray.

Similar to facial-recognition technology, BI2 Technologies’ algorithm measures and analyzes the unique features of a person’s irises and checks them against the database. Mullin says that, because the human eye does not change over time the way a face does as it ages, iris scanning is the more accurate biometric tool. When the system finds a match, a person’s profile, including any mugshots and criminal history, flashes onto the screen. The algorithm tries to find my irises but doesn’t turn up anything. Still, my mind races with questions about where my eyes could have ended up: BI2 Technologies’ system feeds into a government database of information gathered from many sources in many places, not just Cameron County (that’s why it can find matches with records in other jurisdictions). The FBI? Homeland Security? Joe Elizardi, the lieutenant in charge of the jail’s booking and intake, assures me that my eye scans will not be kept in their system once this demonstration is over.

In recent years, and whether we realize it or not, biometric technologies such as face and iris recognition have crept into every facet of our lives. These technologies link people who would otherwise have public anonymity to detailed profiles of information about them, kept by everything from security companies to financial institutions. They are used to screen CCTV camera footage, for keyless entry in apartment buildings, and even in contactless banking. And now, increasingly, algorithms designed to recognize us are being used in border control. Canada has been researching and piloting facial recognition at our borders for a few years, but—at least based on publicly available information—we haven’t yet implemented it on as large a scale as the US has. Examining how these technologies are being used and how quickly they are proliferating at the southern US border is perhaps our best way of getting a glimpse of what may be in our own future—especially given that any American adoption of technology shapes not only Canada–US travel but, as the world learned after 9/11, international travel protocols.

As in the US, the use of new technologies in border control is under-regulated in Canada, human rights experts say—and even law enforcement officials acknowledge that technology isn’t always covered within the scope of existing legislation. Disclosure of its use also varies from spotty to nonexistent. The departments and agencies that use AI, facial verification, and facial comparison in border control—the Canada Border Services Agency (CBSA) and Immigration, Refugees, and Citizenship Canada (IRCC)—are a black box. Journalists and academics have filed access to information requests to learn more about these practices but have found their efforts blocked or delayed indefinitely.

These powerful technologies can fly under the radar by design and often begin as pilot projects in both Canada and the US; as they become normalized, they rapidly expand. By keeping their implementation from public view, governments put lawyers, journalists, migrants, and the wider public on the back foot in the fight for privacy rights. For companies developing these tools, it’s a new gold rush.

The US has gathered biometric records of foreign nationals—including Canadians—as part of its entry/exit data system since 2004. Its Customs and Border Protection agency (CBP) is currently testing and deploying facial recognition across air, sea, and land travel. As of last May, over 7 million passengers departing the US by air had been biometrically verified with a facial-matching algorithm, the Traveler Verification Service.

By the end of last year, CBP had facial-comparison technology in use at twenty-seven locations, including fourteen ports of entry. A few days before I arrived in the US, one of these had been installed at the port of entry I was visiting. A sign disclosed this—sort of. It didn’t use the words “facial recognition” and had a far more standard-sounding description (“CBP is taking photographs of travelers entering the United States in order to verify your identity”). Way at the bottom, the sign indicated that US citizens could opt out. A majority of Canadians apparently have the choice to opt out as well; nobody advised me of this. The thing about these new technological screening systems is that, if you don’t have a choice or aren’t aware that you have one, they quickly become routine.

In 2019, there were about 30 million refugees and asylum seekers on the move worldwide, according to the UNHCR. Despite COVID-19’s temporary slowdown of border crossings around the world, global migration is projected to rise for decades due to conflict and climate change. International borders are spaces of reduced privacy expectation, making it difficult or impossible for people to retain privacy rights as they cross. That makes these areas ripe for experimentation with new surveillance technologies, and it means business is booming for tech companies. According to a July 2020 US Government Accountability Office report, from 2016 to 2019, the global facial-recognition market generated $3 to $5 billion (US) in revenue, and from 2022 to 2024, that revenue is projected to grow to $7 to $10 billion (US).

With increased demand and lack of regulation, more surveillance is appearing at international borders each day. In Jordan, refugees must have their irises scanned to receive monthly financial aid. Along the Mediterranean, the European Border and Coast Guard Agency has tested drone surveillance. Hungary, Latvia, and Greece piloted a faulty system called iBorderCtrl to scan people’s faces for signs of lying before they’re referred to a human border officer; it is unclear whether it will become more widespread or is still being used.

Canada has tested a “deception-detection system,” similar to iBorderCtrl, called the Automated Virtual Agent for Truth Assessment in Real Time, or AVATAR. Canada Border Services Agency employees tested AVATAR in March 2016. Eighty-two volunteers from government agencies and academic partners took part in the experiment, with half of them playing “imposters” and “smugglers,” which the study labelled “liars,” and the other half playing innocent travellers, referred to as “non-liars.” The system’s sensors recorded more than a million biometric and nonbiometric measurements for each person and spat out an assessment of guilt or innocence. The test showed that AVATAR was “better than a random guess” and better than humans at detecting “liars.” However, the study concluded, “results of this experiment may not represent real world results.” The report recommended “further testing in a variety of border control applications.” (A CBSA spokesperson told me the agency has not tested AVATAR beyond the 2018 report and is not currently considering using it on actual travellers.)

Canada is already using artificial intelligence to screen visa applications in what some observers, including the University of Toronto’s Citizen Lab research group, say is a possible breach of human rights. In 2018, Immigration, Refugees, and Citizenship Canada launched two pilot projects to help officers triage online Temporary Resident Visa applications from China and India. When I asked about the department’s use of AI, an IRCC spokesperson told me the technology analyzes data and recognizes patterns in applications to help distinguish between routine and complex cases. The former are put in a stream for faster processing while the latter are sent for more thorough review. “All final decisions on each application are made by an independent, well-trained visa officer,” the spokesperson said. “IRCC’s artificial intelligence is not used to render final decisions on visa applications.” IRCC says it is assessing the success of these pilot projects before it considers expanding their use. But, according to a September 2020 report by the University of Ottawa’s Canadian Internet Policy and Public Interest Clinic (CIPPIC), when governments use AI to screen applications, “false negatives can cast suspicion on asylum seekers, undermining their claims.” In a world first, the UK’s Home Office recently suspended its use of an AI tool in its visa-screening system following a legal complaint raising concerns about discrimination.

The end goal of facial recognition at borders, CIPPIC says, is for the technology to replace other travel documents—essentially, “Your face will be your passport.” This year, Canada and the Netherlands, along with consulting behemoth Accenture and the World Economic Forum (the NGO that runs the glitzy annual Davos conference), plan to launch what the group calls “the first ever passport-free pilot project between the two countries.” Called the Known Traveller Digital Identity, it’s a tech platform that uses facial recognition to identify travellers’ faces and match them to rich digital profiles that have a “trust score” based on a person’s verified information, including from their passport, driver’s licence, credit card, and their interactions with banks, hotels, medical providers, and schools. The program may be voluntary at first, but the CIPPIC warned that, if it is used widely, “it may become effectively infeasible for citizens to opt out.”

Iris and facial recognition fall under biometrics, which the Office of the Privacy Commissioner describes as the automated use of “physical and behavioural attributes, such as facial features, voice patterns … or gait” to identify people. These technologies work like our brains do—we look at a person, our minds process their features, and we check them against our memory. With biometrics, mass amounts of data are captured and stored. There are two parts in the process: enrolment (when the data of a known person is stored in a reference database) and matching (when an algorithm compares a scan of an unknown person against the reference database). The algorithm finds likely matches and returns a result. The bigger and more diverse the database, the more successful the technology should be in returning a match.
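The two-step process described above, enrolment and then matching, can be sketched in a few lines of Python. This is only an illustration under loud assumptions: it treats each scan as a short bit string and compares scans by the fraction of differing bits, a common textbook approach to iris matching. Nothing here reflects how BI2 Technologies’ patented system actually works, and the class name, the 16-bit template length, and the 0.25 threshold are all hypothetical.

```python
from dataclasses import dataclass, field


def hamming_fraction(a: int, b: int, bits: int) -> float:
    """Return the fraction of bit positions where two templates differ."""
    mask = (1 << bits) - 1
    return bin((a ^ b) & mask).count("1") / bits


@dataclass
class ReferenceDatabase:
    """Toy reference database; all parameters are illustrative assumptions."""
    bits: int = 16            # toy template length, far shorter than any real template
    threshold: float = 0.25   # hypothetical cut-off for declaring a match
    records: dict = field(default_factory=dict)

    def enrol(self, person_id: str, template: int) -> None:
        """Enrolment: store the template of a known person."""
        self.records[person_id] = template

    def match(self, probe: int):
        """Matching: return (person_id, distance) for the closest stored
        template within the threshold, or None if nothing is close enough."""
        best = None
        for person_id, template in self.records.items():
            distance = hamming_fraction(probe, template, self.bits)
            if distance <= self.threshold and (best is None or distance < best[1]):
                best = (person_id, distance)
        return best


db = ReferenceDatabase()
db.enrol("person-A", 0b1010110011110000)
db.enrol("person-B", 0b0101001100001111)

noisy_scan = 0b1010110011110011    # person-A's template with two bits flipped
unknown_scan = 0b1111000011001100  # someone who was never enrolled

print(db.match(noisy_scan))
print(db.match(unknown_scan))
```

In this sketch, a slightly noisy rescan of an enrolled person still lands within the threshold, while a scan of someone who was never enrolled returns nothing. Where the threshold sits decides how often the system wrongly matches a stranger or misses a real match.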

In addition to far-ranging privacy concerns, these technologies have been shown to be biased. In one case last January, Detroit police wrongfully arrested a Black man after a facial-recognition algorithm misidentified him. In another case, in 2019, facial recognition mistakenly identified a Brown University student as a suspect in Sri Lanka’s Easter Sunday bombings, which killed more than 250 people.

These are not random glitches. Studies have shown that facial-recognition algorithms are less accurate in identifying people of colour—an MIT and Stanford University analysis found an error rate of up to 0.8 percent for light-skinned men and up to 34.7 percent for dark-skinned women. The bias comes from the data that’s used to assess the performance of the algorithm: this research also found that the data set one major tech company used to train its algorithm was over 83 percent white and over 77 percent male. The company claimed an accuracy rate of more than 97 percent; according to the CIPPIC’s September 2020 report, even a 98 percent accuracy rate would result in thousands of false outcomes per day if applied to all travellers entering Canada. Almost certainly, based on the algorithms and databases currently available, these errors would be concentrated within certain demographic groups, targeting them for greater suspicion and scrutiny.

If you’ve returned from abroad through Toronto Pearson International Airport in recent years, you’ve interacted with new passport scanners, or Primary Inspection Kiosks, that use facial verification to compare a traveller’s face with their passport. Internal CBSA communications obtained by the CBC through an access to information request suggest that these kiosks may refer people from countries including Iran and Jamaica for secondary inspection at higher rates.

Outside Sheriff Lucio’s office door is a display case of confiscated prison shivs. He welcomes me in and invites me to sit at his conference table, taking his seat at the head, Lieutenant Elizardi sitting to his right. Spanish is Lucio’s first language, and he speaks with an accent you’d find on either side of the border. His family moved to the area now called Texas seven generations ago, from Italy. Lucio sees himself as a trendsetter and, until his term ended in December, was eager to adopt more surveillance tech—at one point during our interview, he says it would be a good idea to implant tracking chips in babies when they’re born, to prevent human trafficking. “Technology changes every day,” Lucio tells me. “If you do not go ahead and go with the times, you stay behind.”

BI2 Technologies approached Lucio and other sheriffs in 2017 to try IRIS for free. Mullin, the CEO, told me the offer was well-intentioned—he believes border sheriffs don’t have the tools they need to do their jobs well. But he also acknowledges that it was a business decision: if the company could demonstrate IRIS was useful at the southern border, it might be adopted more broadly and outpace other iris-identification companies.

Within a few days of setting up the technology, Elizardi caught an alleged violent criminal; Lucio said he had been using fake identities to elude police. “He’s been captured four previous times with no results,” Lucio says. “But, using the iris, we found out he was wanted in Boston, Massachusetts, for human smuggling, narcotics, kidnapping, and murder. How’s that?” When the system identified the wanted man, Elizardi remembers, Cameron County started receiving calls from the FBI, Secret Service, and other police agencies. “We were ecstatic. We were like, Wow, we caught our first one!”

Lucio says it wouldn’t bother him to have his eyes scanned—he was fingerprinted when he first became a police officer. He argues that, if you haven’t done anything wrong, you have nothing to worry about. Lucio explains that he has an expectation of privacy inside his house, inside his bedroom, and for his family and children. When I ask him where he draws the line, he says he wouldn’t want someone tapping his phone or listening to his conversations. He says it’s a good thing that, in the US justice system, you need to have probable cause to get a judge to issue a warrant to tap your phone. “I’m a private person, okay? That’s the way I am. But the same token, by me being private, I respect other people’s privacies.”

I ask Flores, the woman I’d seen go through the scanning process at the Cameron County Detention Center, if the process invaded her privacy. “When you’re in jail, you have no privacy, so you have to do it,” she replies. “If you refuse, it’s just going to go worse for you.” If given the choice, she would have refused the IRIS scan. “Now they have everything about you, even your eyes.” But Lucio doesn’t think anyone in custody—which includes people who have not been convicted of a crime—should have a choice when it comes to IRIS.

Mullin argues that, because it is hard to scan a person’s eyes covertly, unlike their face, tools like BI2 Technologies’ are more transparent and ethical than facial recognition. He also says that iris scanning does not suffer from the same biases in falsely identifying people of colour that facial recognition does. He is closely following the discussion around regulation of biometrics and AI: “Only technologies that fall within the constitution of both our federal government and the state should be used in any case. And all of these biometric technologies and the people that provide them and the people and the agencies that use them—I believe their intentions [are not] nefarious.”

He said it’s tough to strike the right balance when technology moves so quickly, and he believes human rights advocates play an important role in the debate. “It’s a difficult balancing, of the state legislatures . . . and at the federal level, to say, Okay, where do we draw the line here? Where do we legislate and implement exactly what the appropriate use of technology capabilities are?”

Founded in 1990, the Electronic Frontier Foundation is a non-profit focused on defending civil liberties in the digital world. Saira Hussain, a staff attorney at the EFF, focuses on the intersection of racial justice and surveillance. Often, she says, new technologies are “tested on communities that are more vulnerable before they’re rolled out to the rest of the population.”

Hussain has abundant concerns about the iris-scanning and facial-recognition tools. If people who are arrested are not told what will happen to their biometric data, it raises the question of whether they can meaningfully consent to it being collected. (There have also allegedly been cases in New York in which people have been detained longer for refusing to have their eyes scanned.) And the use of this technology at border checkpoints means it will disproportionately affect racialized travellers and migrants. “It’s going to be individuals who are trying to flee from persecution and come into the United States, taking refuge,” Hussain says, “and so the people who are going to be affected are people of colour.”

Iris scans can be used to not just identify people but track them, Hussain explains. Iris and face recognition could be integrated into CCTV networks—surveillance cameras that are now found everywhere from shopping malls to transit vehicles—to identify a person without their knowledge. The concern is mission creep: once biometric data is gathered, it can be used to identify people in other contexts, and there’s nothing individuals can do to monitor or stop it. (Mullin maintains that, while integrating iris scans with CCTV is theoretically possible, “In reality, it just doesn’t work.”)

“That’s something we hear again and again in the space of privacy,” Hussain says of the familiar argument that, if you’ve done nothing wrong, you have nothing to worry about. That flies in the face of any justice system that is “premised on the idea that you’re innocent until proven guilty,” she says. “So you’re flipping the equation the other way. You can say the same thing about [a police] agent sitting outside of your house all day every day, tracking your movements. ‘Well, if you don’t have anything to hide, what’s the big deal?’”

Applying for asylum is a process that is enshrined in international law. It allows people fleeing violence, political persecution, or human rights abuses to claim asylum simply by arriving at an international border. The border agency of the country a person arrives at is then supposed to allow them into the country while they present their case to a court and the legal process unfolds.

Although this legal process exists, there are many reasons why asylum seekers may not trust that it will result in a fair outcome, and researchers are learning that the increasing use of AI and biometrics as mechanisms for border control exacerbates this problem. Sam Chambers, a geographer at the University of Arizona, says the surveillance and tracking of migrants makes crossing the border more precarious. “It’s not just about privacy—it’s about life and death there at the border,” he tells me. Chambers explains that the growth of border surveillance, including face and iris recognition, fits into a policy known as “prevention through deterrence,” an Orwellian-sounding term that has existed since the Bill Clinton administration. One example of the policy is the Secure Border Initiative Network, or SBInet, created under George W. Bush and eventually shut down under Barack Obama: the system included sensor towers, radar, long-range cameras, thermal imaging, and motion sensors, all working in concert to detect, analyze, and categorize unauthorized border crossings.

Chambers has published studies demonstrating that SBInet led to a significant increase in migrant deaths in the unrelenting Arizona desert because people were forced to take more dangerous routes to avoid surveillance towers and checkpoints. Between 2002 and 2016, the mortality rate of unauthorized migrants in Pima County grew from about 43 deaths per 100,000 apprehensions to about 220 deaths per 100,000 apprehensions, roughly a fivefold increase.

“That’s the way the whole system is set up,” he says. “Even though it’s called prevention through deterrence, the thing is, it’s not really preventing people from crossing, and it’s not deterring people from crossing—they’re just taking more risk to do this. And that’s the case with crossing the river or, in the case of southern Arizona, traversing the Sonoran Desert for an extended period of time.”

While SBInet was cancelled, private companies are building on the same basic idea to spot more undocumented migrants. For instance, Anduril, a company started by former Facebook employee Palmer Luckey, has erected sentry towers along the southern US border in Texas and California that use its AI system, Lattice, to recognize “threats,” including people and vehicles, crossing the border.

Chambers disapproves of using innovations like Lattice and IRIS to more quickly identify and deport people. “That’s a whole other reason [for migrants] to stay hidden…. If you had this happen and you have to try crossing again, you’re in a database somewhere, and if there’s some reason you’re found again, they can deport you more easily.”

Actually being granted asylum is rare in the US. According to a data research group at Syracuse University, under Donald Trump’s administration, 69,333 people were placed in Migrant Protection Protocols that kept them waiting in Mexico for asylum; only 615 were granted relief—less than 1 percent. Most migrants aren’t provided with lawyers or translators: many struggle to present their cases in English, and they may not have enough evidence to back up their claim. For all the legitimate concern about the US push, over multiple administrations, to build a border wall and implement inhumane immigration policies including family separation—and Canada’s ongoing use of detention centres to jail migrants—the increased use of AI struggles to gain ground in the conversation. But, for people with legitimate asylum claims, who are often people of colour, the growth of AI and biometrics in border control is yet another factor preventing them from crossing safely. There is comparatively little attention paid to AI and biometric systems we can’t easily see but that, in many ways, are more effective than a wall.

Petra Molnar, a human rights lawyer and associate director of the Refugee Law Lab at York University, is documenting the use of technology to track and control migrants, including drones, automated decision making, AI lie detectors, and biometrics. Last summer, she conducted field research on the island of Lesvos, Greece, the site of one of Europe’s largest refugee camps. “There are all sorts of critiques about [surveillance technology] making the border more like a fortress, and that will likely lead to more deaths along the Mediterranean,” Molnar says. “It’s a proven phenomenon—the more you enforce that border, people will take riskier routes, they will not be rescued, they will drown, and so the fact that we are moving ahead on this technology without having a public conversation about the appropriateness of it, that’s probably for me the most troubling part.”

Molnar says we’re seeing AI and biometrics experimentation in spaces where there is already a lack of oversight and people are unable to exercise their rights. Efforts to counterbalance this are so far scant. The EU has the General Data Protection Regulation, which prevents the use of solely automated decision making, including that based on profiling. Massachusetts recently voted to prohibit facial recognition by law enforcement and public agencies, joining a handful of US cities in banning the tool. Molnar says that, in Canada, there are laws that may govern the use of AI indirectly, such as provincial and federal privacy and data-protection regulations, but these weren’t written with AI specifically in mind, and their scope is unclear at the border.

Crucially, no country will be able to entirely address these issues in isolation. “In terms of a regionalized or even a global set of standards, we need to do a lot more work,” Molnar says. “The governance and regulatory framework is patchy at best, so we’re seeing the tech sector really dominate the conversation in terms of who gets to determine what’s possible, what we want to innovate on, and what we want to see developed.”

She says that, in Canada, there isn’t enough of a conversation about regulation happening, and that’s particularly worrying given that we share a massive land border with the US. She questions how much Canada is willing to stand up for human rights for vulnerable populations crossing the border. “Canada could take a much stronger stand on that, particularly because we always like to present ourselves as a human rights warrior, but then we also want to be a tech leader, and sometimes those things don’t square together.”

In 2018, Molnar co-authored a groundbreaking report, titled Bots at the Gate, that revealed the use of AI in Canada’s immigration system. Produced by the University of Toronto’s International Human Rights Program and the Citizen Lab at the Munk School of Global Affairs and Public Policy, the report exposed the Canadian government’s current use of AI to assess the merits of some visa applications. She filed access to information requests to several federal bodies three years ago and is still waiting for them to turn over records. Agencies and departments can deny records, in full or in part, based on exemptions including national security grounds. “That is one of the key areas of concern for us because, in the existing regulatory framework, there is no mandatory disclosure,” she tells me.

Hussain from the Electronic Frontier Foundation says it’s a similar story in the US, where the use of surveillance at the border remains shrouded in secrecy. “When it comes to ICE and Customs and Border Protection, we have found there’s often an unwillingness to produce documents or that you may have to sue before you actually get to see anything,” she says.

Molnar is up against rich tech giants, slow-moving, opaque bureaucracies, and a largely uninformed and complacent public. “It feels like a new fight, but it’s not a new fight. It’s the same kinds of questions that we’ve been asking ourselves for years, like, Where does power locate itself in society? Who gets to decide what world we want to build? Who gets to participate in these discussions?”

She is particularly worried about financial interests playing a role in determining which systems get implemented in border control and how. Governments rely on private companies to develop and deploy tech to control migration, meaning government liability and accountability are shifted to the private sector, she explains. Thanks to a freedom of information request by migrant-rights group Mijente, she tells me, we now know that tech firm Palantir, founded by Trump supporter Peter Thiel, quietly developed technology to identify undocumented people so ICE could deport them—just one example of the kind of threat she anticipates. “That’s where I get worried, for sure, about whether we will win this fight, or whether it’s even a fight that’s possible to win,” Molnar says. “But I think we have to keep trying because it’s yet another example of how unequal our world is. The promise of technology, the romantic idea of it, was that it would equalize our world, that it would make things more democratic or more accessible, but if anything, we’re seeing broader gaps and less access to power or the ability to benefit from technology.”