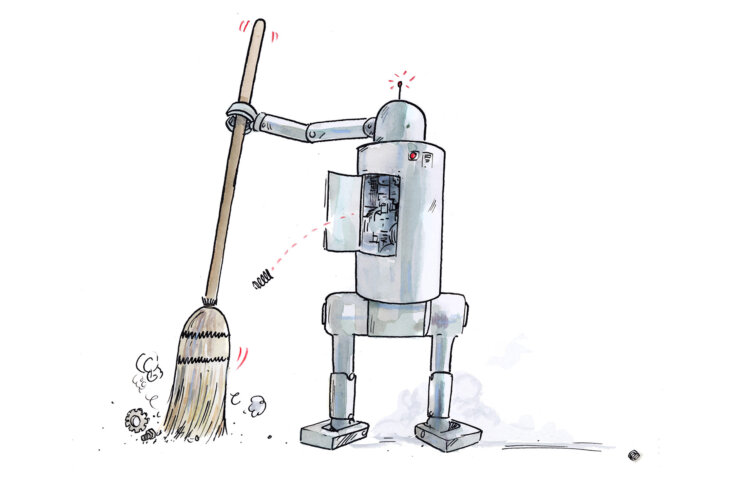

“The Sorcerer’s Apprentice,” a ballad the German poet Goethe published in 1798, is about a novice magician who summons a broom to carry water to fill his master’s bath. But because the broom lacks the brains to realize when it has completed the chore, and because the apprentice doesn’t know the spell to stop it, the room is flooded.

The fable has echoes today, when it feels like every human chore is being handed to artificial intelligence. We talk about AI a lot at The Walrus. It’s our job, of course, to reflect on era-altering innovations. In this case, there’s professional interest too. At a time when AI systems can craft credible prose in seconds, what exactly are human editors and writers good for? BuzzFeed is one of the latest media companies to start testing such systems to generate its content. (The move, unsurprisingly, followed a significant reduction in newsroom staff.) Search Engine Land, which tracks digital media trends, called some of the results “hilariously terrible.”

The most recent concerns about AI are focused on the limits of the so-called “large language models” that power popular chatbots like ChatGPT. Tech firms are increasingly incorporating LLMs into their products, including search engines. Excitement over the tools’ miraculous powers has been followed by sober second thoughts and, in certain circles, outright panic. CNET was forced to pause the use of AI to produce articles offering financial advice when errors were found in many of the pieces it helped write. To make things worse, LLMs are now filling our digital space with synthetic data: data generated by AI systems or computer simulations rather than collected from real-world sources (which is time-consuming and expensive). When you’re dealing with systems prone to perfidy, you have a situation where AI might be poisoning the internet with wrong or misleading content, which chatbots then serve up to us every time we ask them a question.

Maybe the nightmare about AI isn’t that it will go rogue and threaten our existence with lethal viruses. Maybe the likely endgame is similar to the enchanted broom—more mundane but no less messy: humanity flooded with bullshit.

I find myself seeing parallels between AI and Patrice Runner, the convicted Canadian scamster who is the subject of this issue’s cover story. For a range of fees, Runner promised access to a legendary psychic whose supernatural favours guaranteed good luck, health, and wealth. He claims people were paying for a known deceit. “Maybe it’s not moral, maybe it’s bullshit, but it doesn’t mean it’s fraud,” he told our reporter. Can you imagine an AI spokesperson saying that?

Each new and more powerful iteration of the technology will require a tougher approach, stricter testing, a security review. But the public already has a regulator dedicated to culling falsity from truth. It’s called journalism, and it can do work that LLMs can’t do, such as interviewing people to get killer quotes like the one above. For organizations like The Walrus that have built their identity around quality and accuracy, trust is no longer just a selling point. It’s an existential question facing the public space. Once, disinformation came solely from humans: bad actors wanting an advantage. Now it’s a feature of the machines that run our lives.