I spend a lot of time peddling my accumulated junk on the virtual garage sale known as Craigslist. Each time I post an item, the website dutifully presents me with its security check, forcing me to decode one of those sequences of squiggly, distorted letters that look like a cross between a Rorschach test and a four-year-old’s signature: a captcha, as computer scientists call it, short for “Completely Automated Public Turing test to tell Computers and Humans Apart.”

The curious thing about Craigslist’s captchas, however, is that instead of testing me with a single sequence of random letters (ujFRuQ, say), the site asks me to decode two words, both in a distinctly old-fashioned (though distorted) font. Since some strange rumours about captchas have been circulating recently—that spammers have set up websites offering free porn as a reward for deciphering captchas, for instance—I wanted to make sure I wasn’t involved in anything shady.

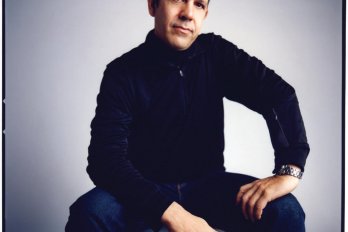

I eventually tracked the Craigslist version back to Luis von Ahn, the thirty-year-old Guatemalan computer scientist who helped develop the first captchas in 2000. Apparently, he had a revelation a few years later while sitting on a plane. “Everybody else in my row was doing crossword puzzles. And right then, I thought, ‘Hmm, this is something computers cannot do,’” he told me. “It turned out that I was actually wrong; computers can do crossword puzzles. But the idea stuck with me.” He realized that he had unwittingly created a system that was frittering away, in ten-second increments, millions of hours of a most precious resource: human brain cycles.

With the help of a MacArthur “genius” grant, von Ahn set out to make amends. Now a growing number of websites, from e-commerce (Ticketmaster) to social networking (Facebook) to blogging (WordPress), have implemented the precocious professor’s new tool, dubbed recaptcha. If you’ve visited those sites, your squiggly-letter-reading ability has been harnessed for a massive project that aims to scan and make freely available every out-of-copyright book in the world, by deciphering words from old texts that have stumped scanning software.

The largest scanning centre in this project, directed by a San Francisco–based non-profit called the Open Content Alliance, occupies a dimly lit corner of the seventh floor of Robarts Library, on the University of Toronto’s downtown campus. The space is filled with twenty-three cubicle-like scanning stations draped on all sides with light-proof black cloth, like rows of coin-operated peep shows.

When the centre opened in 2004, its single robotic scanner used a vacuum suction arm to turn pages automatically. “We ran it into the ground,” recalls coordinator Gabe Juszel, a cheerfully earnest former filmmaker who sports a soul patch. “It was literally smoking by the time we were done with it.” But with the wide variations in book sizes, binding, and condition, they consistently found that they could achieve a higher scanning rate by simply turning off the robotic arm and flipping the pages manually—something else, it seems, that humans are still better at.

Two shifts of dedicated employees keep pages turning from 8:30 in the morning to 11 at night, leavening the monotony by listening to music on their iPods, reading, or (in one particularly talented case) knitting as they go. Two Canon digital SLR cameras mounted in opposing corners of each booth click at an adjustable pre-set interval. Rookies opt for seven seconds, the slowest possible; veterans can scan a page per second.

Juszel had just returned from the OCA’s annual meeting, where it was announced that the number of books available on the group’s Internet Archive had broken the one million mark. U of T is currently adding about 1,500 books a week—and at that rate there’s no need to be choosy about which ones to scan. “It’s a real beast to feed, actually,” says Jonathan Bengtson, the librarian who oversees the university’s role. Entire subject areas are scanned by sorting for pre-1923 works (in accordance with US copyright laws), eliminating duplicates, and taking everything that’s left. Scholars from around the world can also request books for ten cents a page, and typically see them online in less than twenty-four hours.

The most popular Toronto contribution, Juszel reports, is a 1475 edition of St. Augustine’s De civitate Dei, downloaded a baffling 75,911 times (at press time). “Who knew people liked Latin so much?” he says. Toward the other end of the spectrum is a book pulled from the stacks around the same time: the Montreal Philatelist, a monthly journal that ran from 1898 to 1902, which features lurid tales of stamp counterfeiting in Newfoundland.

Once the text is scanned, the file is sent to a server in California, where it’s run through optical character recognition software to produce a digital full-text version. For the newer books, OCR is about 90 percent accurate. But that success rate drops to as low as 60 percent for older texts, which often contain fonts that are blurry and less uniform. These troublesome scans are sent on to the recaptcha servers at Carnegie Mellon University in Pittsburgh.

The program distorts a known word so it will have a way to check that the user is human, and then pairs it up with a word OCR has failed to decipher. Each mystery word is served up in multiple recaptchas, until a consensus about the correct answer emerges. Sometimes a single user confirms the computer’s best guess, but the average is about four users per word. The system is now correcting over 10 million words a day, with 99.1 percent accuracy, von Ahn says.
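For the technically curious, the pairing-and-consensus idea described above can be sketched in a few lines of Python. This is only an illustration, not von Ahn's actual code: the names `UnknownWord` and `submit`, the two-vote threshold, and the choice to count the OCR guess as one vote are all assumptions made for the example.

```python
from collections import Counter


class UnknownWord:
    """One word OCR could not read, plus the votes collected for it (hypothetical)."""

    def __init__(self, word_id, ocr_guess=None, votes_needed=2):
        self.word_id = word_id
        self.votes_needed = votes_needed  # assumed threshold, not from the article
        self.votes = Counter()
        if ocr_guess:
            # Assumption: the OCR guess counts as one vote, so a single
            # matching human answer is enough to settle the word.
            self.votes[ocr_guess.lower()] += 1

    def resolved(self):
        """Return the agreed reading, or None while there is no consensus."""
        if not self.votes:
            return None
        answer, count = self.votes.most_common(1)[0]
        return answer if count >= self.votes_needed else None


def submit(control_word, unknown, control_answer, unknown_answer):
    """Check the known word; record a vote on the unknown word only if it passes."""
    if control_answer.strip().lower() != control_word.lower():
        return False  # failed the human check, so the vote is discarded
    unknown.votes[unknown_answer.strip().lower()] += 1
    return True


# Usage: one user passes the control word and confirms the OCR guess.
word = UnknownWord("scan-001", ocr_guess="philatelist")
submit("morning", word, "morning", "philatelist")
print(word.resolved())
```

When there is no OCR guess to confirm, or when users disagree, the word simply stays unresolved and keeps being served until one reading collects enough matching votes.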

As innovative as the system is, von Ahn wasn’t the first to harness the power of personal computers around the world. Since the late 1990s, scientists have been recruiting people to download special screen savers that devote spare computing power to projects ranging from the search for extraterrestrial life to climate modelling. The difference with recaptcha is that humans are doing the computing, without necessarily realizing it.

Still, the service is supplied free to any website that wants it, and in addition to helping decipher books scanned for the Internet Archive, recaptcha has been recruited to assist in the digitization of the entire archive of the New York Times back to 1851, which should be completed this year and posted on the Times website. The pursuit of such public goods, von Ahn hopes, will deflect any resentment from the human scanners whose brain cycles he is capturing. “We could do other things, like digitizing cheques,” he notes. “But banks already make enough money.”