TECHNOLOGY / JULY/AUGUST 2024

AI Is a False God

The real threat with super intelligence is falling prey to the hype

BY NAVNEET ALANG

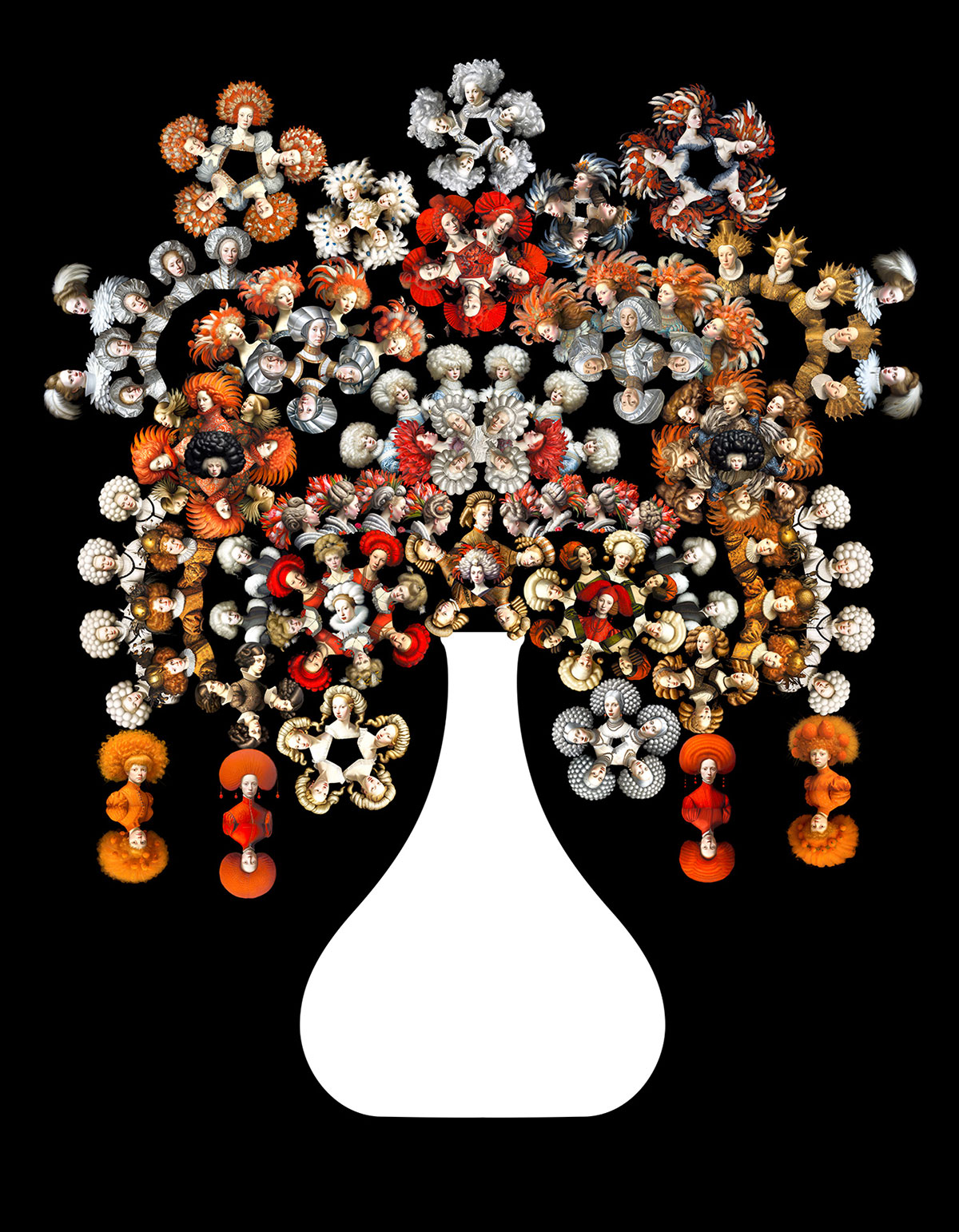

ART BY MARIAN BANTJES AND MIDJOURNEY

Published 6:30, May 29, 2024


In Arthur C. Clarke’s famous short story “The Nine Billion Names of God,” a sect of monks in Tibet believes humanity has a divinely inspired purpose: inscribing all the various names of God. Once the list was complete, they thought, He would bring the universe to an end. Having worked at it by hand for centuries, the monks decide to employ some modern technology. Two skeptical engineers arrive in the Himalayas, powerful computers in tow. Instead of 15,000 years to write out all the permutations of God’s name, the job gets done in three months. As the engineers ride ponies down the mountainside, Clarke’s tale ends with one of literature’s most economical final lines: “Overhead, without any fuss, the stars were going out.”

It is an image of the computer as a shortcut to objectivity or ultimate meaning, which also happens to be, at least in part, what now animates the fascination with artificial intelligence. Though the technologies that underpin AI have existed for some time, it’s only since late 2022, with the emergence of OpenAI’s ChatGPT, that technology approaching intelligence has appeared to be much closer. In a 2023 report by Microsoft Canada, president Chris Barry proclaims that “the era of AI is here, ushering in a transformative wave with potential to touch every facet of our lives,” and that “it is not just a technological advancement; it is a societal shift that is propelling us into a future where innovation takes centre stage.” That is among the more level-headed reactions. Artists and writers are panicking that they will be made obsolete, governments are scrambling to catch up and regulate, and academics are debating furiously.

About the art

The above image, showing what AI is capable of in the hands of an artist, was made by designer Marian Bantjes using Midjourney, a platform that generates images based on text prompts. The composition, made up of twenty images selected from hundreds created over several sessions, depicts AI (symbolized by an otherworldly baby) being worshipped and promising impossible things.

Businesses have been eager to rush aboard the hype train. Some of the world’s largest companies, including Microsoft, Meta, and Alphabet, are throwing their full weight behind AI. On top of the billions spent by big tech, funding for AI start-ups hit nearly $50 billion (US) in 2023. At an event at Stanford University in April, OpenAI CEO Sam Altman said he didn’t really care if the company spent $50 billion a year on AI. Part of his vision is for a kind of super assistant, one that would be a “super-competent colleague that knows absolutely everything about my whole life, every email, every conversation I’ve ever had, but doesn’t feel like an extension.”

But there is also a profound belief that AI represents a threat. Philosopher Nick Bostrom is among the most prominent voices asserting that AI poses an existential risk. As he lays out in his 2014 book Superintelligence: Paths, Dangers, Strategies, if “we build machine brains that surpass human brains in general intelligence . . . the fate of our species would depend on the actions of the machine super-intelligence.” The classic story here is that of an AI system whose only—seemingly inoffensive—goal is making paper clips. According to Bostrom, the system would realize quickly that humans are a barrier to this task, because they might switch off the machine. They might also use up the resources needed for the manufacturing of more paper clips. This is an example of what AI doomers call the “control problem”: the fear that we will lose control of AI because any defences we’ve built into it will be undone by an intelligence that’s millions of steps ahead of us.

Before we do, in fact, cede any more ground to our tech overlords, it’s worth casting one’s mind back to the mid-1990s and the arrival of the World Wide Web. That, too, came with profound assertions of a new utopia, a connected world in which borders, difference, and privation would end. Today, you would be hard pressed to argue that the internet has been some sort of unproblematic good. The fanciful did come true; we can carry the whole world’s knowledge in our pockets. This just had the rather strange effect of driving people a bit mad, fostering discontent and polarization, assisting a renewed surge of the far right, and destabilizing both democracy and truth. It’s not that one should simply resist technology; it can, after all, also have liberating effects. Rather, when big tech comes bearing gifts, you should probably look closely at what’s in the box.


What we call AI at the moment is predominantly focused on LLMs, or large language models. The models are fed massive sets of data—ChatGPT essentially hoovered up the entire public internet—and trained to find patterns in them. Units of meaning, such as words, parts of words, and characters, become tokens and are assigned numerical values. The models learn how tokens relate to other tokens and, over time, learn something like context: where a word might appear, in what order, and so on.
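As a rough illustration of that description, here is a toy sketch in Python. It is not how ChatGPT or any production LLM is built: real systems use subword tokenizers and neural networks rather than simple counts. The function names (`build_vocab`, `encode`, `bigram_counts`) and the sample sentence are invented for this example. The sketch only shows the two ideas named above: words become numbered tokens, and the program records which tokens tend to follow which.

```python
from collections import Counter, defaultdict


def build_vocab(text):
    """Give each distinct lowercase word an integer ID, in order of first appearance."""
    vocab = {}
    for word in text.lower().split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Turn a sentence into the list of token IDs for its words."""
    return [vocab[word] for word in text.lower().split()]


def bigram_counts(token_ids):
    """Count, for every token, how often each other token comes directly after it."""
    follows = defaultdict(Counter)
    for current, nxt in zip(token_ids, token_ids[1:]):
        follows[current][nxt] += 1
    return follows


# Hypothetical sample text, chosen only for illustration.
corpus = "the cloud was sad the sun was out the cloud made peace"
vocab = build_vocab(corpus)
ids = encode(corpus, vocab)
follows = bigram_counts(ids)

# The token that most often follows "the" in this tiny corpus.
most_likely_id = follows[vocab["the"]].most_common(1)[0][0]
id_to_word = {token_id: word for word, token_id in vocab.items()}
print(ids)
print(id_to_word[most_likely_id])
```

In this tiny corpus, “cloud” follows “the” more often than “sun” does, so the counts favour “cloud.” A real model does something loosely analogous over vastly more text and with far richer notions of context than the single preceding word.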

That doesn’t sound impressive on its own. But when I recently asked ChatGPT to write a story about a sentient cloud who was sad the sun was out, the results were surprisingly human. Not only did the chatbot produce the various components of a children’s fable, it also included an arc in which, eventually, “Nimbus” the cloud found a corner of the sky and made peace with a sunny day. You might not call the story good, but it would certainly entertain my five-year-old nephew.

Robin Zebrowski, professor and chair of cognitive science at Beloit College in Wisconsin, explains the humanity I sensed this way: “The only truly linguistic things we’ve ever encountered are things that have minds. And so when we encounter something that looks like it’s doing language the way we do language, all of our priors get pulled in, and we think, ‘Oh, this is clearly a minded thing.’”

This is why, for decades, the standard test for whether technology was approaching intelligence was the Turing test, named after its creator Alan Turing, the British mathematician and Second World War code breaker. The test involves a human interrogator who poses questions to two unseen subjects—a computer and another human—via text-based messages to determine which is the machine. A number of different people play the roles of interrogator and respondent, and if a sufficient proportion of interviewers is fooled, the machine could be said to exhibit intelligence. ChatGPT can already fool at least some people in some situations.

Such tests reveal how closely tied to language our notions of intelligence are. We tend to think that beings that can “do language” are intelligent: we marvel at dogs that appear to understand more complex commands, or gorillas that can communicate in sign language, precisely because it brings us closer to our mechanism of rendering the world sensible.

But being able to do language without also thinking, feeling, willing, or being is probably why writing done by AI chatbots is so lifeless and generic. Because LLMs are essentially looking at massive sets of patterns of data and parsing how they relate to one another, they can often spit out perfectly reasonable-sounding statements that are wrong or nonsensical or just weird. That reduction of language to just a collection of data is also why, for example, when I asked ChatGPT to write a bio for me, it told me I was born in India, went to Carleton University, and had a degree in journalism. It was wrong on all three counts (it was the UK, York University, and English). To ChatGPT, it was the shape of the answer, expressed confidently, that was more important than the content, the right pattern mattering more than the right response.

All the same, the idea of LLMs as repositories of meaning that are then recombined does align with some assertions from twentieth-century philosophy about the way humans think, experience the world, and create art. French philosopher Jacques Derrida, building on the work of linguist Ferdinand de Saussure, suggested that meaning was differential—the meaning of each word depends on that of other words. Think of a dictionary: the meaning of words can only ever be explained by other words, which in turn can only ever be explained by other words. What is always missing is some sort of “objective” meaning outside of this never-ending chain of signification that brings it to a halt. We are instead forever stuck in this loop of difference. Some, like Russian literary scholar Vladimir Propp, theorized that you could break down folklore narratives into constituent structural elements, as per his seminal work, Morphology of the Folktale. Of course, this doesn’t apply to all narratives, but you can see how you might combine units of a story—a starting action, a crisis, a resolution, and so on—to then create a story about a sentient cloud.

The idea that computers can think quickly appears to become more viable. Raphaël Millière is an assistant professor at Macquarie University in Sydney, Australia, and has spent his career thinking about consciousness and then AI. He describes being part of a large collaborative project called the BIG-bench test. It deliberately presents AI models with challenges beyond their normal capabilities to account for how quickly they “learn.” In the past, the array of tasks meant to test AI’s sophistication included guessing the next move in a chess game, playing a role in a mock trial, and also combining concepts.


Today, AI can take previously unconnected, even random things, such as the skyline of Toronto and the style of the impressionists, and join them to create what hasn’t existed before. But there is a sort of discomforting or unnerving implication here. Isn’t that also, in a way, how we think? Millière says that, for example, we know what a pet is (a creature we keep with us at home) and we also know what a fish is (an animal that swims in large water bodies); we combine those two in a way that keeps some characteristics and discards others to form a novel concept: a pet fish. Newer AI models boast this capacity to amalgamate into the ostensibly new—and it is precisely why they are called “generative.”

Even comparatively sophisticated arguments can be seen to work this way. The problem of theodicy has been an unending topic of debate amongst theologians for centuries. It asks: If an absolutely good God is omniscient, omnipotent, and omnipresent, how can evil exist when God knows it will happen and can stop it? It radically oversimplifies the theological issue, but theodicy, too, is in some ways a kind of logical puzzle, a pattern of ideas that can be recombined in particular ways. I don’t mean to say that AI can solve our deepest epistemological or philosophical questions, but it does suggest that the line between thinking beings and pattern recognition machines is not quite as hard and bright as we may have hoped.

The sense of there being a thinking thing behind AI chatbots is also driven by the now common wisdom that we don’t know exactly how AI systems work. What’s called the black box problem is often framed as mysticism—the robots are so far ahead or so alien that they are doing something we can’t comprehend. That is true, but not quite in the way it sounds. New York University professor Leif Weatherby suggests that the models are processing so many permutations of data that it is impossible for a single person to wrap their head around it. The mysticism of AI isn’t a hidden or inscrutable mind behind the curtain; it’s to do with scale and brute power.

Yet, even in that distinction that AI is able to do language only through computing power, there is still an interesting question of what it means to think. York University professor Kristin Andrews, who researches animal intelligence, suggests that there are lots of cognitive tasks—remembering how to get food, recognizing objects or other beings—that animals do without necessarily being self-aware. In that sense, intelligence may well be attributed to AI because it can do what we would usually refer to as cognition. But, as Andrews notes, there’s nothing to suggest that AI has an identity or a will or desires.

So much of what produces will and desire is located in the body, not just in the obvious sense of erotic desire but the more complex relation between an interior subjectivity, our unconscious, and how we move as a body through the world, processing information and reacting to it. Zebrowski suggests there is a case to be made that “the body matters for how we can think and why we think and what we think about.” She adds, “It’s not like you can just take a computer program and stick it in the head of a robot and have an embodied thing.” Computers might in fact approach what we call thinking, but they don’t dream, or want, or desire, and this matters more than AI’s proponents let on—not just for why we think but what we end up thinking. When we use our intelligence to craft solutions to economic crises or to tackle racism, we do so out of a sense of morality, of obligation to those around us, our progeny—our cultivated sense that we have a responsibility to make things better in specific, morally significant ways.

Perhaps the model of the computer in Clarke’s story, something that is kind of a shortcut to transcendence or omniscience, is thus the wrong one. Instead, Deep Thought, the computer in Douglas Adams’s The Hitchhiker’s Guide to the Galaxy, might be closer. When asked “the answer to the Ultimate Question of Life, the Universe and Everything,” it of course spits out that famously obtuse answer: “42.”

The absurdist humour was enough on its own, but the ridiculousness of the answer also points to an easily forgotten truth. Life and its meaning can’t be reduced to a simple statement, or to a list of names, just as human thought and feeling can’t be reduced to something articulated by what are ultimately ones and zeros. If you find yourself asking AI about the meaning of life, it isn’t the answer that’s wrong. It’s the question. And at this particular juncture in history, it seems worth wondering what it is about the current moment that has us seeking answers from a benevolent, omniscient digital God—that, it turns out, may be neither of those things.

This March, I spent two days at the Microsoft headquarters, just outside of Seattle. Microsoft is one of the tech incumbents that is most “all in” on AI. To prove just how much, they brought in journalists from around the world to participate in an “Innovation Campus Tour,” which included a dizzying run of talks and demos, meals in the seemingly never-ending supply of on-site restaurants, and a couple of nights in the kind of hotel writers usually cannot afford.

We were walked through a research centre, wearing ear plugs to block out the drone of a mini field of fans. We attended numerous panels: how teams were integrating AI, how those involved with “responsible AI” are tasked with reining in the technology before it goes awry. There was lots of chatter about how this work was the future of everything. In one session, the affable Seth Juarez, principal program manager of AI platforms, spoke of AI as being like moving from a shovel to a tractor: that it will, in his words, “level up humanity.”


Some of the things we saw were genuinely inspiring, such as the presentation by Saqib Shaikh, who is blind and has spent years working on SeeingAI. It’s an app that is getting better and better at labelling objects in a field of view in real time. Point it at a desk with a can and it will say, “A red soda can, on a green desk.” Similarly optimistic was the idea that AI could be used to preserve dying languages, more accurately scan for tumours, or more efficiently predict where to deploy disaster response resources—usually by processing large amounts of data and then recognizing and analyzing patterns within it.

Yet for all the high-minded talk of what AI might one day do, much of what artificial intelligence appeared to do best was entirely quotidian: taking financial statements and reconciling figures, making security practices more responsive and efficient, transcribing and summarizing meetings, triaging emails more efficiently. What that emphasis on day-to-day tasks suggested is that AI isn’t so much going to produce a grand new world as, depending on your perspective, make what exists now slightly more efficient—or, rather, intensify and solidify the structure of the present. Yes, some parts of your job might be easier, but what seems likely is that those automated tasks will in turn simply be part of more work.

It’s true that AI’s capacity to process millions of factors at once may in fact vastly outweigh humans’ ability to parse particular kinds of problems, especially those in which the factors at play can be reduced to data. At the end of a panel at Microsoft on AI research, we were each offered a copy of a book titled AI for Good, detailing more altruistic uses. Some of the projects discussed included using machine learning to parse data gathered from tracking wildlife using sound or satellites or to predict where best to put solar panels in India.

That’s encouraging stuff, the sort of thing that, particularly while living in the 2020s, lets one momentarily feel a hint of relief or hope that, maybe, some things are going to get better. But the problems preventing, say, the deployment of solar power in India aren’t simply due to a lack of knowledge. There are instead the issues around resources, will, entrenched interests, and, more plainly, money. This is what the utopian vision of the future so often misses: if and when change happens, the questions at play will be about if and how certain technology gets distributed, deployed, taken up. It will be about how governments decide to allocate resources, how the interests of various parties affected will be balanced, how an idea is sold and promulgated, and more. It will, in short, be about political will, resources, and the contest between competing ideologies and interests. The problems facing Canada or the world—not just climate change but the housing crisis, the toxic drug crisis, or growing anti-immigrant sentiment—aren’t problems caused by a lack of intelligence or computing power. In some cases, the solutions to these problems are superficially simple. Homelessness, for example, is reduced when there are more and cheaper homes. But the fixes are difficult to implement because of social and political forces, not a lack of insight, thinking, or novelty. In other words, what will hold progress on these issues back will ultimately be what holds everything back: us.

The idea of an exponentially greater intelligence, so favoured by big tech, is a strange sort of fantasy that abstracts out intelligence into a kind of superpower that can only ever increase, conceiving of problem solving as a capacity on a dial that can simply be turned up and up. To assume this is what’s called tech solutionism, a term coined a decade ago by Evgeny Morozov, the progressive Belarusian writer who has taken it upon himself to ruthlessly criticize big tech. He was among the first to point to how Silicon Valley tended to see tech as the answer to everything.

Some Silicon Valley businessmen have taken tech solutionism to an extreme. It is these AI accelerationists whose ideas are the most terrifying. Marc Andreessen was intimately involved in the creation of the first web browsers and is now a billionaire venture capitalist who has taken up a mission to fight against the “woke mind virus” and generally embrace capitalism and libertarianism. In a screed published last year, titled “The Techno-Optimist Manifesto,” Andreessen outlined his belief that “there is no material problem—whether created by nature or by technology—that cannot be solved with more technology.” When writer Rick Perlstein attended a dinner at Andreessen’s $34 million (US) home in California, he found a group adamantly opposed to regulation or any kind of constraint on tech (in a tweet at the end of 2023, Andreessen called regulation of AI “the new foundation of totalitarianism”). When Perlstein related the whole experience to a colleague, he “noted a similarity to a student of his who insisted that all the age-old problems historians worried over would soon obviously be solved by better computers, and thus considered the entire humanistic enterprise faintly ridiculous.”

Andreessen’s manifesto also included a perfectly normal, non-threatening section in which he listed off a series of enemies. It included all the usual right-wing bugbears: regulation, know-it-all academics, the constraint on “innovation,” progressives themselves. To the venture capitalist, these are all self-evident evils. Andreessen has been on the board of Facebook/Meta—a company that has allowed mis- and disinformation to wreak havoc on democratic institutions—since 2008. However, he insists, apparently without a trace of irony, that experts are “playing God with everyone else’s lives, with total insulation from the consequences.”

A common understanding of technology is that it is a tool. You have a task you need to do, and tech helps you accomplish it. But there are some significant technologies—shelter, the printing press, the nuclear bomb or the rocket, the internet—that almost “re-render” the world and thus change something about how we conceive of both ourselves and reality. It’s not a mere evolution. After the arrival of the book, and with it the capacity to document complex knowledge and disseminate information outside of the established gatekeepers, the ground of reality itself changed.

AI occupies a strange position, in that it likely represents one of those sea changes in technology but is at the same time overhyped. The idea that AI will lead us to some grand utopia is deeply flawed. Technology does, in fact, turn over new ground, but what was there in the soil doesn’t merely go away.

Having spoken to experts, it seems to me as though the promise of AI lies in dealing with sets of data that exist at a scale humans simply cannot operate at. Pattern recognition machines put to use in biology or physics will likely yield fascinating, useful results. AI’s other uses seem more ordinary, at least for now: making work more efficient, streamlining some aspects of content creation, making accessing simple things like travel itineraries or summaries of texts easier.


That isn’t to say AI is some benevolent good, however. An AI model can be trained on billions of data points, but it can’t tell you if any of those things is good, or if it has value to us, and there’s no reason to believe it will. We arrive at moral evaluations not through logical puzzles but through consideration of what is irreducible in us: subjectivity, dignity, interiority, desire—all the things AI doesn’t have.

To say that AI on its own will be able to produce art misunderstands why we turn to the aesthetic in the first place. We crave things made by humans because we care about what humans say and feel about their experience of being a person and a body in the world.

There’s also a question of quantity. In dropping the barriers to content creation, AI will also flood the world with dreck. Already, Google is becoming increasingly unusable because the web is being flooded with AI-crafted content designed to get clicks. There is a mutually constitutive problem here: digital tech has produced a world full of so much data and complexity that, in some cases, we now need tech to sift through it. Whether you consider this cycle vicious or virtuous likely depends on whether you stand to gain from it or are left to trudge through the sludge.

Yet it’s also the imbrication of AI into existing systems that is cause for concern. As Damien P. Williams, a professor at the University of North Carolina at Charlotte, pointed out to me, training models absorb masses of data based on what is and what has been. It’s thus hard for them to avoid existing biases, both of the past and the present. Williams points to how, if asked to reproduce, say, a doctor yelling at a nurse, AI will make the doctor a man and the nurse a woman. Last year, when Google hastily released Gemini, its competitor to other AI chatbots, it produced images of “diverse” Nazis and America’s founding fathers. These odd mistakes were a ham-fisted attempt to try and pre-empt the problem of bias in the training data. AI relies on what has been, and trying to account for the myriad ways we encounter and respond to the prejudice of the past appears to simply be beyond its ken.

The structural problem with bias has existed for some time. Algorithms were already used for things like credit scores, and already AI usage in things like hiring is replicating biases. In both cases, pre-existing racial bias emerged in digital systems, and as such, it’s often issues of prejudice, rather than the trope of a rogue AI system launching nukes, that are the problem. That’s not to say that AI won’t kill us, however. More recently, it was revealed that Israel was using a version of AI called Lavender to help it attack targets in Palestine. The system is meant to mark members of Hamas and Palestinian Islamic Jihad and then provide their locations as potential targets for air strikes—including their homes. According to +972 Magazine, many of these attacks killed civilians.

As such, the threat of AI isn’t really that of a machine or system which offhandedly kills humanity. It’s the assumption that AI is in fact intelligent that causes us to outsource an array of social and political functions to computer software—it’s not just the tech itself which becomes integrated into day-to-day life but also the particular logic and ethos of tech and its libertarian-capitalist ideology. The question is then: to what ends AI is deployed, in what context, and with what boundaries. “Can AI be used to make cars drive themselves?” is an interesting question. But whether we should allow self-driving cars on the road, under what conditions, embedded in what systems—or indeed, whether we should deprioritize the car altogether—are the more important questions, and they are ones that an AI system cannot answer for us.

Everything from a tech bro placing his hopes for human advancement on a superhuman intelligence to a military relying on AI software to list targets evinces the same desire for an objective authority figure to which one can turn. When we look to artificial intelligence to make sense of the world—when we ask it questions about reality or history or expect it to represent the world as it is—are we not already bound up in the logic of AI? We are awash with digital detritus, with the cacophony of the present, and in response, we seek out a superhuman assistant to draw out what is true from the morass of the false and the misleading—often to only be misled ourselves when AI gets it wrong.

The driving force behind the desire to see an objective voice where there is none is perhaps that our sense-making apparatuses have already been undermined in ways not unique to AI. The internet has already been a destabilizing force, and AI threatens to make it worse. Talking to University of Vermont professor Todd McGowan, who works on film, philosophy, and psychoanalysis, it clicked for me that our relationship to AI is basically about desire to overcome this destabilization.

We are living in a time where truth is unstable, shifting, constantly in contestation. Think of the embrace of conspiracy theories, the rise of the anti-vax movement, or the mainstreaming of racist pseudoscience. Every age has its great loss—for modernism, it was the coherence of the self; for postmodernism, the stability of master narratives—and now, in the twenty-first century, there is an increasing pressure on the notion of a shared vision of reality. Meanwhile, social figures, from politicians to celebrities to public intellectuals, seem to be subject, more than ever, to the pull of fame, ideological blinkers, and plainly untrue ideas.

What is missing, says McGowan, is what psychoanalytic thinker Jacques Lacan called “the subject supposed to know.” Society is supposed to be filled with those who are supposed to know: teachers, the clergy, leaders, experts, all of whom function as figures of authority who give stability to structures of meaning and ways of thinking. But when the systems that give shape to things start to fade or come under doubt, as has happened to religion, liberalism, democracy, and more, one is left looking for a new God. There is something particularly poignant about the desire to ask ChatGPT to tell us something about a world in which it can occasionally feel like nothing is true. To humans awash with a sea of subjectivity, AI represents the transcendent thing: the impossibly logical mind that can tell us the truth. Lingering at the edges of Clarke’s short story about the Tibetan monks was a similar sense of technology as the thing that lets us exceed our mere mortal constraints.

But the result of that exceeding is the end of everything. In turning to technology to make a deeply spiritual, manual, painstaking task more efficient, Clarke’s characters end up erasing the very act of faith that sustained their journey toward transcendence. But here in the real world, perhaps meeting God isn’t the aim. It’s the torture and the ecstasy of the attempt to do so. Artificial intelligence may keep growing in scope, power, and capability, but the assumptions underlying our faith in it—that, so to speak, it might bring us closer to God—may only lead us further away from Him.

Ten or twenty years from now, AI will undoubtedly be more advanced than it is today. Its problems with inaccuracies or hallucinations or bias likely won’t be solved, but perhaps it will finally write a decent essay. Even so, if I’m lucky enough to be around, I’ll step out of my home with my AI assistant whispering in my ear. There will still be cracks in the sidewalk. The city in which I live will still be under construction. Traffic will probably still be a mess, even if the cars drive themselves. Maybe I’ll look around, or look up at the sky, and my AI assistant will tell me something about what I see. But things will still keep moving on only slightly differently than they do now. And the stars? Against what might now seem like so much change, the sky will still be full of them.

Correction, July 22, 2024: An earlier version of this article referred to a walk-through of a Microsoft data centre. In fact, the walk-through was of the Redmond silicon lab. The Walrus regrets the error.

The O’Hagan Essay on Public Affairs is an annual research-based examination of the current economic, social, and political realities of Canada. Commissioned by the editorial staff at The Walrus, the essay is funded by Peter and Sarah O’Hagan in honour of Peter’s late father, Richard, and his considerable contributions to public life.
