Sam Altman, CEO of OpenAI, has ideas about the future. One of them is about how you’ll make money. In short, you won’t necessarily have to, even if your job has been replaced by a powerful artificial intelligence tool. But the price of that purported freedom from the drudgery of work is living in a turbo-charged capitalist technocracy. “In the next five years, computer programs that can think will read legal documents and give medical advice,” Altman wrote in a 2021 post called “Moore’s Law for Everything.” In another ten, “they will do assembly-line work and maybe even become companions.” Beyond that time frame, he wrote, “they will do almost everything.” In a world where computers do almost everything, what will humans be up to?

Looking for work, maybe. A recent report from Goldman Sachs estimates that generative AI “could expose the equivalent of 300 million full-time jobs to automation.” And while both Goldman and Altman believe that a lot of new jobs will be created along the way, it’s uncertain what that will look like. “With every great technological revolution in human history . . . it has been true that the jobs change a lot, some jobs even go away—and I’m sure we’ll see a lot of that here,” Altman told ABC News in March. Altman has good reason to imagine a solution to that problem: his company might be the one creating it.

In November, OpenAI released ChatGPT, a large language model chatbot that can mimic human conversations and written work. This spring, the company unveiled GPT-4, an even more powerful AI program that can do things like explain why a joke is funny or plan a meal by scanning a photo of the inside of someone’s fridge. Meanwhile, other major technology companies like Google and Meta are racing to catch up, sparking a so-called “AI arms race” and, with it, the terror that many of us humans will very quickly be deemed too inefficient to keep around—at work anyway.

Altman’s solution is universal basic income, or UBI: giving people a guaranteed amount of money on a regular basis, either to supplement their wages or simply to live off. “. . . a society that does not offer sufficient equality of opportunity for everyone to advance is not a society that will last,” Altman wrote in his 2021 blog post. Tax policy as we’ve known it will be even less capable of addressing inequality in the future, he continued. “While people will still have jobs, many of those jobs won’t be ones that create a lot of economic value in the way we think of value today.” He proposed that, in the future—once AI “produces most of the world’s basic goods and services”—a fund could be created by taxing land and capital rather than labour. The dividends from that fund could be distributed to every individual to use as they please—“for better education, healthcare, housing, starting a company, whatever,” Altman wrote.

UBI isn’t new. Forms of it have even been tested, including in Southern Ontario, where (under specific conditions) it produced broadly positive effects on health and well-being. UBI also gained renewed attention during the COVID-19 pandemic as focus turned to precarious low-wage work, job losses, and emergency government assistance programs. Recently, profiles of Altman in the Wall Street Journal and the New York Times raised the idea of UBI as a solution to massive job losses, with the Journal noting that Altman’s goal is to “free people to pursue more creative work.” In 2021, Altman was more specific, saying that advanced AI will allow people to “spend more time with people they care about, care for people, appreciate art and nature, or work toward social good.” But recent research and commentary offer a different, less rosy perspective on this UBI-based future.

It might be more useful to think of Altman’s UBI proposal as a solution in search of a problem. The concept helps Altman frame his vision of the future as a fait accompli, with UBI as some kind of end point we’ll inevitably reach. So it’s fair to wonder what Altman really hopes to gain from the UBI canard. First, it presupposes a power dynamic that benefits him and other existing tech giants. Second, it shuts out alternative visions of the future.

In reality, UBI may not even be necessary. OpenAI’s recent working paper, written with researchers from OpenResearch and the University of Pennsylvania, presents a different picture of the future of work. Yes, jobs will be affected, the paper concluded. Approximately 80 percent of the US workforce “could have at least 10% of their work tasks affected by the introduction of LLMs (large language models),” the research found, with around 19 percent of workers having at least 50 percent of their tasks affected. But how much depends on the role. If your work is “heavily reliant on science and critical thinking skills,” you might be less exposed than if you do things like programming or writing. In other words, despite economic and labour uncertainty, and the chorus of fear surrounding the current discourse on AI, humans will probably still work quite a bit in the future, just supported by forms of AI like LLMs. Even then, the researchers noted, the pace and extent of AI’s integration into the economy will depend on the sector and on things like “data availability, regulatory environment, and the distribution of power and interests.”

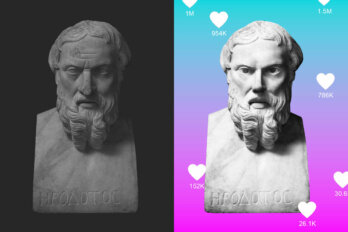

Power and interests are, of course, at the heart of the idea of UBI as Altman presented it in 2021: a system in which the masses are merely shareholders in the wealth generated by AI megacorporations. Altman’s description of what UBI could do for us in the future (free up our time to work on art or to socialize) is at odds with the technocratic structure of the world he’s describing, one that would, in theory, have to be dominated by a small number of companies generating immense profits. In this scenario, those who still work would presumably face significant power imbalances between themselves and their employers, as Australian researcher Lauren Kelly suggests in her 2022 paper on the future of work, published in the Journal of Sociology. In fact, the way Altman describes the future brings to mind something already here: social media content moderators. In these low-wage roles, often performed under reportedly dire conditions, human workers monitor and correct AI programs that are designed to filter out toxic content on social media platforms. From the outside, the AI programs appear automatic, intelligent, and nuanced. In reality, we’re seeing the results of human labour, referred to as “ghost work” because it’s unseen and unheard, hidden behind a veil of techno wonder.

It’s unclear how serious Altman is about creating this UBI-supported future. Though it’s been mentioned in recent interviews, it hasn’t featured significantly in OpenAI’s plans. As it happens, OpenAI’s “capped-profit” structure dictates that profits over a certain amount are owned by its nonprofit entity. When he was asked in January whether OpenAI planned to “take the proceeds that you’re presuming you’re going to make someday and . . . give them back to society,” Altman demurred. Yes, the company could distribute “cash for everyone,” he said. Or “we’re [going to] invest all of this in a non-profit that does a bunch of science.” He didn’t commit either way. The UBI plan will have to wait, it seems.

But we don’t have to wait for Altman to enact his grand vision. Instead, we could demand that control over AI’s development be shared more broadly and reflect goals other than those Altman and others articulate. We don’t need to sit by as AI overwhelms our professional or personal lives, hoping that a UBI scheme, offered as some kind of consolation, saves the day. As for the utopian vision where humans have more time to be creative or to spend with their loved ones in a clean, renewable future: why wait for a tech company to grant it to us? As Aaron Benanav, author of Automation and the Future of Work, puts it: “. . . all of this is possible now if we fight for it.”