In late 2019 and early 2020, devastating fires swept across Australia. Widely attributed to the global climate crisis, the fires ravaged property and wildlife and claimed lives. But the crisis also provided an opportunity for climate change denialists to propagate disinformation. Conspiracy theories circulated across social media, claiming the bush fires had been deliberately lit to clear a path for a high-speed railway down Australia’s east coast, that they were the work of Islamic State militants, that they were caused by Chinese billionaires using lasers to map out new construction projects, or that they were caused by ecoterrorists trying to spur action on climate change.

At least one prominent politician bought into it all. Speaking in the UK House of Commons, Foreign and Commonwealth Office minister Heather Wheeler answered a question about the Australian bush fires by stating, “Very regrettably, it is widely reported on social media that 75 percent of the fires were started by arsonists.” Her remark prompted a group of UK scientists to write an open letter condemning her for giving legitimacy to a widely debunked claim. Meanwhile, an article in the newspaper The Australian claimed that 183 arsonists had been arrested in the “current bush fire season.” Though false, the piece was widely circulated on social media by influencers like Donald Trump Jr. and the right-wing conspiracy outlet InfoWars. “What Australians dealing with the bush fire crisis need is information and support,” said Australian opposition leader Anthony Albanese. “What they don’t need is rampant misinformation and conspiracy theories being peddled by far-right pages and politicians.”

We live in a single global village with numerous shared problems crying out for collective action, from emergencies like COVID-19 to longer-term existential challenges such as global climate change and nuclear weapons. What does it portend for the future when one of the principal means we have to communicate with one another is so heavily distorted that it propels confusion and chaos? How is it that, with such awe-inspiring computing power and telecommunications resources at our disposal, we have arrived at such a dismal state?

Scan what people are doing with their devices as they ride your local public transit system and you’ll see many of them swiping and tapping their way through levels of brain teasers or animated games of skill. These mobile games work by a process known as “variable-rate reinforcement,” in which rewards are delivered unpredictably (such as through “loot boxes” containing unknown rewards). Variable-rate reinforcement is effective at shaping a steady increase in desired behaviour and affects the release of dopamine, a neurotransmitter that plays a central role in reward and motivation. All this points to a fundamental mechanism at work in our embrace of social media: they are addiction machines. Companies are not only aware of the addictive and emotionally compelling characteristics of social media; they study and refine them intensively.

Game designers use variable-rate reinforcement to entice players to play repeatedly. While the player moves through the game, getting addicted, the application learns about the player’s device, interests, and movements, among other things. Social media platforms, and the devices that connect us to them, work much the same way. They sense when you are disengaged and are programmed with tools to draw you back: little red dots on app icons, banner notifications, the sound of a bell or a vibration, scheduled tips and reminders. The typical mismatch between the information we actually receive after a notification and what we hoped to receive can itself reinforce compulsive behaviour. Take Snapchat, the popular app on which users post and view “disappearing” images and videos. The fleeting nature of the content encourages obsessive checking, to the point where some users check in every day for thousands of days on end. The feeds have no defined end point; they maximize anticipation, not reward.

It’s not just the platforms that zero in on these mechanisms of addiction; digital marketers, data brokers, psychology consultants, and behavioural-modification firms do as well. Those interested in designing more addictive offerings can attend professional conferences (like the Traffic and Conversion Summit) where colleagues share best practices on how to game the platforms’ ad and content moderation systems in their clients’ favour. This industry has spent the last decade monitoring, analyzing, and “actioning” trillions of data points on consumers’ interests and behaviours. It is a short pivot, but one with ominous consequences, to turn the power of this vast apparatus away from consumer interests and toward nefarious political ends.

The appetite to retain “eyeballs,” for example, is clearly having an impact on public discourse. Consider the most basic problem: information overload. According to Twitter’s own statistics, “Every second, on average, around 6,000 tweets are tweeted on Twitter, which corresponds to over 350,000 tweets sent per minute, 500 million tweets per day and around 200 billion tweets per year.” On average, 1.47 billion people log on to Facebook daily, and five new Facebook profiles are created every second. Facebook videos are viewed 8 billion times per day. Every minute, more than 3.87 million Google searches are conducted, and seven new articles are added to Wikipedia. The fact that we carry devices that are always on and connected exacerbates the overload. The vast amounts of information produced by individuals, by businesses, and by machines operating autonomously, twenty-four hours a day, seven days a week, are unleashing a swelling, real-time tsunami of data.

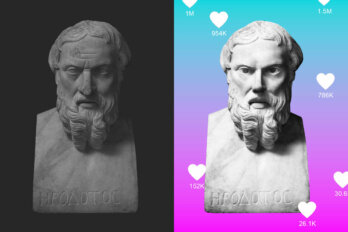

While it is certainly true that the volume of information expanded rapidly in prior eras (e.g., with the advent of mechanized printing), what makes our communications ecosystem different is that the overall system is designed both to push that information in front of us and to entice us, through addictive triggers, to stay connected to it. This flood of content also amplifies cognitive biases in ways that allow false information to root itself in the public consciousness. Social psychology experiments have demonstrated that repeated exposure to information increases the likelihood that observers will believe it, even if it is false. This might help explain why Donald Trump’s firehose of obvious Twitter lies about “witch hunts” and “fake news” keeps his diehard supporters loyal rather than driving them away.

Just as mosquitoes breed in a humid climate with lots of standing water, those who seek to foment chaos, mistrust, and fear as smokescreens for their corruption are flourishing in a communications era biased toward sensationalistic, emotional, and divisive content. As a consequence, we are seeing an explosion of social media–enabled disinformation “influence” operations—some open, commercial, and seemingly above board, but many others serving an underworld of autocrats, despots, and unethical business executives. “The new information environment,” writes Herb Lin, senior research scholar for cyber policy and security at Stanford University, “provides the 21st century information warrior with cyber-enabled capabilities that Hitler, Stalin, Goebbels, and McCarthy could have only imagined.”

Probably the most well-known provider of these new types of commercial psy-op services is Cambridge Analytica, thanks to its prominent and controversial role in Brexit and the 2016 US presidential election, as exposed in the documentary The Great Hack. Cambridge Analytica itself was destroyed by its own sloppy hubris and the consciences of a few whistleblowers. But countless other companies like it roll on, following variations on the same successful model.

BuzzFeed News discovered that, “since 2011, at least 27 online information operations have been partially or wholly attributed to PR or marketing firms. Of those, 19 occurred in 2019 alone.” Its investigation profiled the Archimedes Group, an Israeli PR firm that boasts it can “use every tool and take every advantage available in order to change reality according to our client’s wishes.” Among its clients are individuals and organizations in states with histories of sectarian violence, including Mali, Tunisia, and Nigeria. In Nigeria, the company ran both supportive and oppositional campaigns around a single candidate, former vice-president Atiku Abubakar. (It was speculated that the supportive campaign was meant to identify his supporters so that they could then be targeted with anti-Abubakar messaging.)

Not surprisingly, those inclined to authoritarianism around the world are actively taking advantage of the already propitious environment that social media presents for them. A recent survey by the Oxford Internet Institute found that forty-eight countries have at least one government agency or political party engaged in shaping public opinion through social media. Leaders like Donald Trump, Russia’s Vladimir Putin, and Turkey’s Recep Tayyip Erdoğan lambaste “fake news” while simultaneously pushing—often shamelessly—blatant falsehoods. Supporting their efforts is a growing number of companies, ranging from sketchy startups to massive consulting ventures, all of which stand to make millions engineering consent, undermining adversaries, or simply seeding dissent. We are, as a consequence, witnessing a dangerous blending of the ancient arts of propaganda, advertising, and psychological operations with the machinery and power of AI and big data.

The Philippines is a good case study of what is likely to become more common across the global South. As is often the case with developing countries, traditional internet connectivity is still a challenge for many Filipinos, but cheap mobile devices and free social media accounts are growing in leaps and bounds. As a consequence, Filipinos principally get their news and information from Facebook and other social media platforms, prioritizing headlines they skim through over the lengthier content that accompanies them and fostering the types of cognitive biases outlined earlier.

Meanwhile, dozens of sketchy PR companies have sprouted up, openly advertising disinformation services that are barely disguised as benign-sounding “identity management” or “issue framing.” Political campaigns routinely make use of these consulting services, which in turn pay college students thousands of dollars a month to set up fake Facebook groups designed to look like genuine grassroots campaigns and used to spread false, incriminatory information. The situation has devolved to the point where Facebook’s public policy director for global elections said the Philippines was “patient zero in the global information epidemic.” Spearheading it all is the country’s right-wing president, Rodrigo Duterte, who has been widely criticized for harnessing Facebook to undermine and demonize political opposition and independent journalists and to fuel a lethal drug war. His organizers marshalled an army of cybertrolls, called Diehard Duterte Supporters or DDS, that openly mocked opposition leaders and threatened female candidates and journalists with rape and death.

The rabid social media–fuelled disinformation wars are degrading the political environment in the Philippines, but since virtually everyone in a position of power has bought into them, there is little will or incentive to do anything about them. Disinformation operations are run with impunity. PR startups are thriving while everyone else is mildly entertained, confused, or frightened by the spectacle of it all. As the Washington Post summed it up, “Across the Philippines, it’s a virtual free-for-all. Trolls for companies. Trolls for celebrities. Trolls for liberal opposition politicians and the government. Trolls trolling trolls.” The Post report, which profiles numerous companies paying trolls to spread disinformation, also warns that what is happening in the Philippines will likely find its way abroad. “The same young, educated, English-speaking workforce that made the Philippines a global call center and content moderation hub” will likely help the internationalization of the Philippines’s shady PR firms, which will find willing clients in other countries’ domestic battles.

The story of the Philippines could be told in much the same terms the world over: in sub-Saharan Africa; throughout Southeast Asia, India and Pakistan, and Central Asia; across Central and South America; across the Middle East, the Gulf, and North Africa. The same dynamics are playing out everywhere. In Indonesia, military personnel coordinate disinformation campaigns that include dozens of websites and social media accounts, whose operators are paid a fee by the military and whose posts routinely heap praise on the Indonesian army’s suppression of separatist movements in Papua. Taiwan is a petri dish of disinformation. According to the New York Times, “So many rumors and falsehoods circulate on Taiwanese social media that it can be hard to tell whether they originate in Taiwan or in China, and whether they are the work of private provocateurs or of state agents.” In India, racist disinformation on WhatsApp groups and other social media platforms has incited mass outbreaks of communal violence, including the horrific “Delhi Riots” of February 2020, during which mostly Hindu mobs attacked Muslims, leaving dozens dead.

Governments are not the only ones experimenting with social media psy-ops. Big corporations are getting into the game too. Perhaps the most alarming are the corporate disinformation campaigns around climate change. Big oil, chemical, and other extractive-industry companies have hired private investigators to dig up dirt on lawyers, investigative journalists, and advocacy organizations. They’ve employed hackers-for-hire to target NGOs and their funders and to leak the details of their strategic planning to media outlets on corporate payrolls. They have built entire organizations, think tanks, and other front groups to generate scientific-looking but implausible reports that distract audiences from the real science of climate change.

Individual users may not have the expertise, tools, or time to verify claims. Meanwhile, fresh scandals continuously rain down on them, confusing fact and fiction. Worse yet, a 2015 study by MIT political scientist Adam Berinsky found that “attempting to quash rumors through direct refutation may facilitate their diffusion by increasing fluency.” In other words, efforts to correct falsehoods can ironically contribute to their further propagation and even acceptance. Citizens become fatigued trying to discern objective truth as the flood of news washes over them, hour by hour. Questioning the integrity of all media can in turn lead to fatalism, cynicism, and eventually paralysis. In the words of social media expert Ethan Zuckerman, “A plurality of unreality does not persuade the listener of one set of facts or another but encourages the listener to doubt everything.”

Contributing to this problem are social media companies that appear either unwilling or unable to weed out malicious or false information. That’s not to say the companies are sitting idle. Feeling the pressure to react, they have taken several noteworthy steps to combat the plague of disinformation, including shutting down inauthentic accounts by the thousands, hiring more personnel to screen posts and investigate malpractice on their platforms, “down-ranking” clearly false information in users’ feeds, and collaborating with fact-checking and other research organizations to spot disinformation. These efforts intensified during the pandemic.

But in spite of these measures, social media remain polluted by misinformation and disinformation, not only because of their own internal mechanisms, which privilege sensational content, or because of the speed and volume of posts, but also because of their seeming ignorance of the malicious actors who seek to game them. For example, despite widespread revelations of Russian influence operations over social media in 2016, two years later researchers posing as Russian trolls were still able to buy political ads on Google, even paying in Russian currency, registering from a Russian zip code, and using indicators linking their advertisements to the Internet Research Agency, the very trolling farm that had been the subject of intense congressional scrutiny. Similarly, despite all the promises made to control misinformation about COVID-19 on its platform, an April 2020 study by The Markup found that Facebook was still allowing advertisers to target users the company believed were interested in “pseudoscience,” a category of roughly 78 million people.

In testimony before the US Congress, Facebook COO Sheryl Sandberg made a startling admission: from October 2017 to March 2018, Facebook deleted 1.3 billion fake accounts. In July 2018, Twitter was deleting on the order of a million accounts a day and had deleted 70 million fake accounts in May and June of that year alone. The revelations caused Twitter’s share price to drop by 8 percent, a good indication of the business disincentives at work in the industry. Put simply, removing accounts and cleaning up your platform is also bad for business.

Social media’s inability to track inauthentic behaviour seems destined to get far worse before it gets better, as malicious actors now use altered images and videos, called “deep fakes,” as well as large WhatsApp groups to spread disinformation virally. These techniques are far more difficult for the platforms to combat and will almost certainly become a staple of discrediting and blackmail campaigns in the political realm. Ultimately, for all the deletions, fact-checking, and monitoring systems the platforms produce, social media will remain easy to exploit as tools of disinformation for as long as gathering subscribers is at the heart of their business model.

Excerpted in part from Reset: Reclaiming the Internet for Civil Society by Ronald Deibert. ©2020 Ronald J. Deibert and the Canadian Broadcasting Corporation. Published by House of Anansi Press (houseofanansi.com).