In 2011, Boeing announced it was going to update the 737, the bestselling commercial jetliner in history. The company’s archrival, Airbus, had launched a version of its popular twin-engine A320 jet that promised up to 20 percent less fuel consumption. That made it a direct threat to Boeing’s workhorse 737, which first flew in 1967. But a full redesign could cost billions of dollars and require airlines to retrain pilots and maintenance crews. Boeing picked a cheaper option: replacing the old engines with larger, more fuel-efficient ones. It was a decision that culminated in the Ethiopian Airlines crash on March 10 that killed 157 people.

The Chicago-based aviation company ran into a series of problems in implementing its upgrade. Because the new engines had a larger diameter, Boeing engineers had to mount them slightly farther forward and higher on the wing. This change gave the plane a tendency to pitch its nose upward in certain maneuvers, raising the risk of a stall. “Obviously, this is a bad news scenario,” says Mike Doiron, president of Doiron Aviation Consulting. “To offset that and help certify the airplane, Boeing resorted to sensors.”

The sensors on the front of the plane relayed information to an automated system known as MCAS (Maneuvering Characteristics Augmentation System). If MCAS detected the airplane pitching up, it would automatically push the nose down to stabilize the plane, much like the lane-keeping systems in newer cars that steer you back when you begin to drift out of your lane. But this fix led to yet another problem: when a sensor malfunctioned, MCAS could push the plane’s nose down even when it wasn’t pitching up.

The glitch is suspected to be responsible for the Ethiopian Airlines crash as well as the Lion Air crash in Indonesia last October that killed 189 people. “From my understanding, the airplane kept pushing the nose down and the flight crew kept pulling it back,” says Doiron. “Chances are, the airplane thought they were going to stall again and pushed even further down.” The two disasters, less than five months apart, forced aviation regulators around the world to ground the aircraft, 371 of which were in service.

Automation systems on planes have come a long way, explains Doiron, and can now do everything from steering to landing an aircraft. They have made flying safer, by reducing the opportunities for human error, and more efficient, by using less fuel, which lowers the carbon footprint of flying. “However, for every bit of good news, sometimes, there’s a little bit of bad news,” says Doiron. The bad news is that pilots rarely get to fly the plane by hand and aren’t given adequate training on what to do when these sophisticated automated systems fail.

This problem is known as the paradox of automation: the better and more efficient an automated system, the less practice its human operator gets, eroding their skills and their ability to respond to a challenging situation.

“Automation is becoming so complex that airlines sometimes have to decide between training pilots on physically flying the plane or running the automation,” says Doiron. Bloomberg reported that the day before the Lion Air crash, pilots flying the same Boeing 737 Max 8 experienced difficulties with MCAS but managed to turn it off, potentially saving everyone on board. This was a remarkable feat considering that Boeing had not even included MCAS in the plane’s operations manual. Investigators turned their attention to the system only in the wake of the tragedy, when a different crew was unable to turn it off and the plane crashed into the Java Sea. According to the Wall Street Journal, Boeing “had decided against disclosing more details to cockpit crews due to concerns about inundating average pilots with too much information—and significantly more technical data—than they needed or could digest.”

The paradox, and its sometimes catastrophic consequences, extends to other areas where automation prevents human operators from making urgent interventions. Tesla’s Autopilot, a semiautonomous system introduced in 2014, uses sensors, cameras, and software to drive the car on highways. In a handful of fatal crashes involving the system, investigations found that drivers failed to take over in time to avert the accident. So far, three people have died while driving with Autopilot engaged. “It’s probably going to get worse before it gets better,” warns Doiron. He’s talking about the transportation industry, but the warning could soon extend to nearly every part of our automated lives.

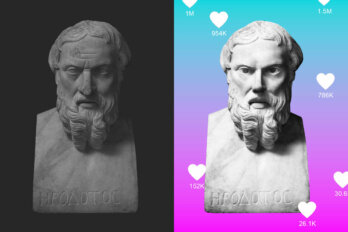

The automation paradox is all around us: from Facebook’s algorithms, which replaced human editors, to digital assistants like Siri. The paradox is at work when we can no longer remember phone numbers or do mental math the way we used to because our phones do it for us. It is at work, too, when we are bewildered by a paper map because Google Maps and its creeping blue dot no longer require us to remember street names or directions.

In many ways, automation has been a boon. In health care, robot-assisted surgery allows doctors to perform complicated, minimally invasive procedures with greater precision and shorter recovery times. After the first robot-assisted liver resection at University of Utah Health, the patient went home the same day instead of staying in hospital for a week. Even here, the paradox comes into play: a recent study from the University of California found that robot-assisted surgery may be limiting the amount of practice surgical trainees receive, leaving many new surgeons unable to operate independently. While traditional open surgery was effective in teaching trainees, the study concluded that robotic techniques allowed only a “minority of robotic surgical trainees to come to competence.”

Tim Harford, in his book Messy: The Power of Disorder to Transform Our Lives, divides the paradox into three strands. “First, automatic systems accommodate incompetence by being easy to operate and by automatically correcting mistakes,” he writes, in an adaptation published in the Guardian. “Because of this, an inexpert operator can function for a long time before his lack of skill becomes apparent—his incompetence is a hidden weakness that can persist almost indefinitely.”

Second, even when the operator is highly skilled, automated systems deny them the chance to practise, gradually diminishing their expertise. Finally, Harford argues, these systems tend to fail only in extraordinary circumstances, which demand “a particularly skilful response,” one the operator has either lost or never developed for lack of practice.

Doiron, who has been in the aviation industry for over forty-five years, explains that automated systems are not new, but flight crews used to be better prepared when the system failed. “You had a checklist that you ran,” he says. “You did step one, and if that didn’t work, you did step two, then step three, and so forth, and eventually you got to the point where you’re actually pulling the circuit breaker on the system to basically shut it down altogether.” These checklist systems are still in use, but the technology has started to outpace the training.

With planes largely flying themselves, the overall experience level in the cockpit has decreased. “They do hand fly the plane whenever they get the opportunity,” says Doiron. “But it’s still not the same as it was fifty years ago when pilots hand flew the airplane all the time.” As a result, some pilots’ handling skills can get rusty. To counter this, pilots are encouraged to turn off the autopilot from time to time and practise flying the plane manually. The only problem is, if pilots turn off the autopilot only when it’s safe, they never get to practise handling the plane in a challenging situation.

Google, with its self-driving cars, takes a different approach to the paradox: it removes humans from the equation entirely. In 2009, the company was retrofitting Toyotas and Lexuses with automated systems that would drive the car, but by 2014, it was building its own two-seater cars, with no steering wheel and no gas or brake pedals.

“If you have a steering wheel, there’s an implicit expectation that you’re going to do something with it,” says Chris Urmson, former head of Google’s self-driving-car project, in an interview on the podcast 99% Invisible. If a Google car encounters a technical issue, it pulls over, and a replacement car comes to pick up the passengers, leaving the faulty car for technicians to repair. In Urmson’s view, a safe car is one without a human driver. That is probably true, considering that human error is behind 90 percent of road accidents, which kill 1.35 million people every year.

But handing over driving to an autonomous car raises important questions about security and ethics. In 2015, US researchers remotely hijacked a Jeep Cherokee’s computer system, taking control of the radio, windshield wipers, and air conditioning, and ultimately cutting the transmission while the car was travelling down a highway. The experiment exposed vulnerabilities in the car’s software and forced Fiat Chrysler to recall 1.4 million vehicles. A self-driving car will also face ethical dilemmas, such as whether to hit a pregnant woman or swerve into a divider, potentially killing the two passengers in the car. A human driver might make a moral choice to shift the danger to themselves to save a stranger. But programming a universal moral code into a car is difficult, given how much ethics vary around the world.

Catherine Burns, a systems design engineer and director of the Advanced Interface Design Lab at the University of Waterloo, suggests a middle path that combines the best of humans and machines in an approach that engineers call human-centred automation. “I’m talking about automation where the human and the automation are partners,” says Burns. “We want the automation to be augmenting the humans, not replacing the human and not relying on the human to only do the hard part of the work.”

A human-first approach, for Burns, means recognizing irreplaceable human skills—like complex decision-making, emotional intelligence, and creativity—and building automation to support them. This approach would mean rigorous training for human operators to help them prepare for exceptional situations—or what are sometimes called “edge cases.” (Fire drills, for example, were developed to help reduce panic in case of an emergency and identify any problems in exit strategy without the threat of a real fire.) Human-centred systems are already implemented in nuclear power plants, where operators are routinely required to perform manual tasks, regardless of the automation at the plant. “They check certain things, turn something on, bring down a system just to bring it up again. The whole point is to try and keep up those skills,” says Burns. “It’s a deliberate effort.”

Netflix, whose algorithms curate our weekend binges, unveiled software in 2011 called Chaos Monkey that randomly shut down parts of its own system, forcing engineers to adapt. Seeing how the service behaved under abnormal conditions allowed engineers to modify it so that it could handle future unplanned problems, building resilience into the system.

“If we aren’t constantly testing our ability to succeed despite failure, then it isn’t likely to work when it matters most—in the event of an unexpected outage,” wrote John Ciancutti, a former engineer at Netflix, in a blog post on Medium. “The best way to avoid failure is to fail constantly.”

Flight simulators are the Chaos Monkey of the aviation industry. Pilots are required to do simulator training every year, but the type and duration of that training are often left up to individual airlines. More importantly, some automated systems are so ingrained in the plane that they are built into the simulators; the first autopilot, which kept a plane flying straight and level, was introduced in 1912. Pilots have come to trust these systems in the same way we have come to trust computers. “We assume that the computer is always right, and when someone says the computer made a mistake, we assume they are wrong or lying,” writes Harford.

Removing automated systems from our lives, or rejecting them altogether, is no longer possible. Overall, such systems do increase safety and productivity, but they aren’t infallible, and when they make a mistake, however rare, the repercussions can be severe. Recognizing this requires more than a software update to a shoddily redesigned plane. At a time when automation is widely seen as stealing jobs, the paradox tells us that the role of humans is even more critical.